In the fall semester of my last year at Monroe Community College, I collaborated with a few of the students in its Information and Computer Technology Club. We came up with the idea of controlling a drone using a camera. The camera would read the user's hand gestures and direct the drone based on the gesture given.

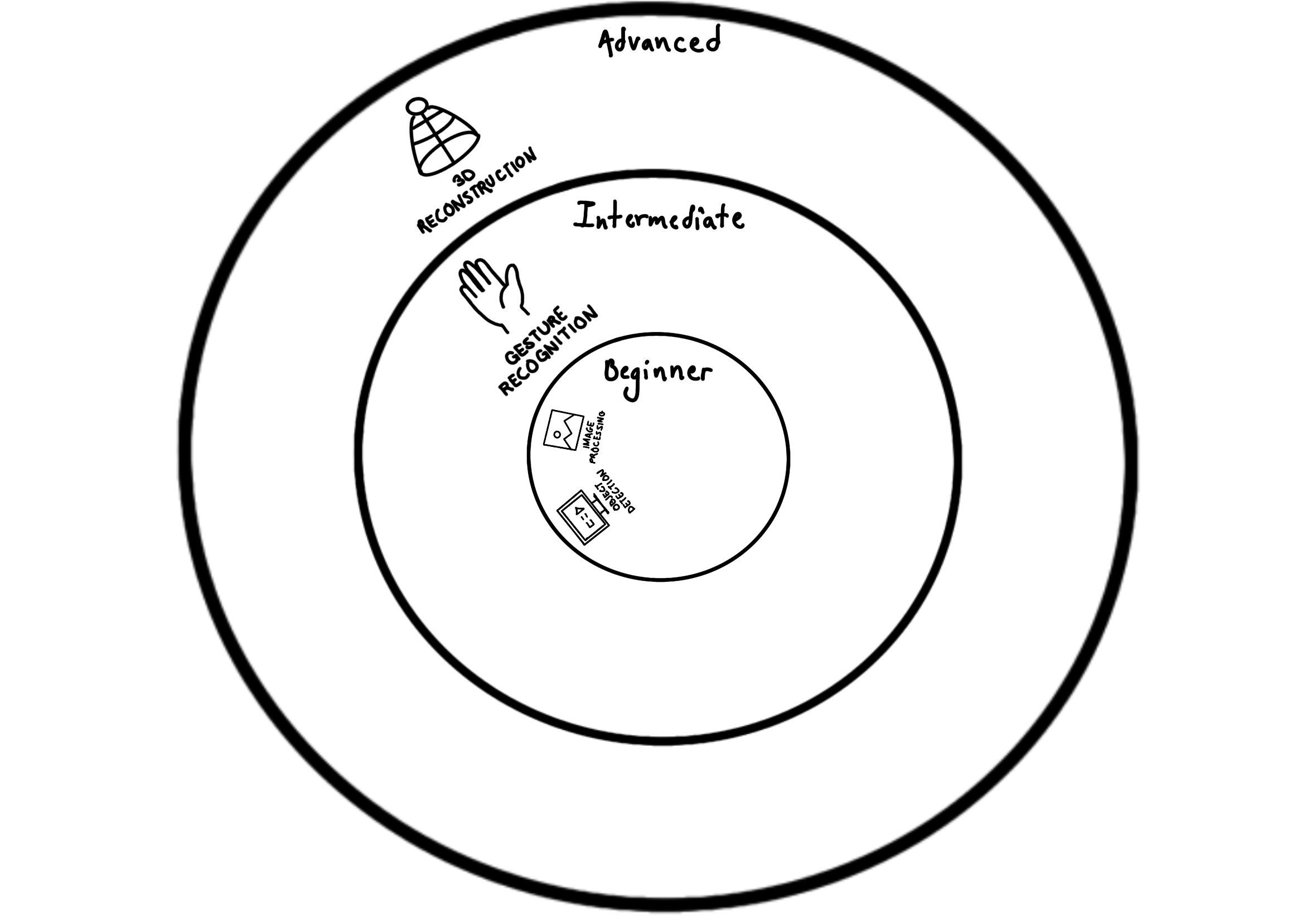

Bringing this idea to life took two semesters. Over that time the project pivoted in approach many times. We started off very ambitious, so the scope also narrowed as we went. Looking back at how the idea evolved, making it real involved a lot of simplifying.

We started by thinking of building a drone from scratch and then programming it. We then moved on to using an already built drone so we could focus solely on programming. On the programming side, we started off with the idea of building and training a custom model to detect different hand gestures. The most significant milestone came when we opted to use an open source model freely available online instead.

That open source model was MediaPipe. With MediaPipe we had a model that did not just detect the user's hand. It could also pick out individual points on that hand, such as the wrist and finger joints, and report them as landmarks. These landmarks made it possible to use maths to read hand orientations efficiently enough to steer a drone through a touchless interface.
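
To give a feel for the kind of landmark maths involved, here is a minimal sketch in Python. It is not the project's actual code. It assumes the landmarks have already been pulled out of MediaPipe as a list of 21 `(x, y)` pairs in normalized image coordinates, and it relies on MediaPipe's hand landmark numbering, where index 0 is the wrist and index 9 is the base knuckle of the middle finger. The function names, the 20 degree dead zone, and the `"left"`/`"right"`/`"hover"` commands are made up for illustration.

```python
import math

# Indices from MediaPipe's 21-point hand landmark layout.
WRIST = 0
MIDDLE_FINGER_MCP = 9


def hand_tilt_degrees(landmarks):
    """Angle of the wrist-to-middle-knuckle vector, measured from image 'up'.

    `landmarks` is a list of 21 (x, y) pairs in normalized image coordinates.
    Positive means the hand leans toward the right of the image,
    negative means it leans toward the left.
    """
    wrist_x, wrist_y = landmarks[WRIST]
    knuckle_x, knuckle_y = landmarks[MIDDLE_FINGER_MCP]
    dx = knuckle_x - wrist_x
    # Image y grows downward, so flip it to make 'up' positive.
    dy = wrist_y - knuckle_y
    return math.degrees(math.atan2(dx, dy))


def tilt_to_command(angle, dead_zone=20.0):
    """Map a tilt angle to a hypothetical steering command.

    Angles inside the dead zone count as an upright hand, so small
    wobbles do not move the drone.
    """
    if angle > dead_zone:
        return "right"
    if angle < -dead_zone:
        return "left"
    return "hover"
```

In a real loop, each camera frame would produce a fresh set of landmarks, and the resulting command would be passed on to whatever control interface the drone exposes. Note that if the camera feed is mirrored, left and right would need to be swapped.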

Link to demonstration: Gesture Drone Demo